The artificial intelligence landscape is in a constant state of flux, with breakthroughs announced at a dizzying pace. French AI powerhouse Mistral AI, known for its potent open-weight and commercial models, has once again stepped into the spotlight with the reported release of Mistral Medium 3 on or around May 7, 2025. This new offering isn’t just an iteration; it’s positioned as a strategic move to redefine the balance between state-of-the-art (SOTA) performance, cost-efficiency, and enterprise deployability. But does Mistral Medium 3 live up to the hype? Let’s dive deep into what we know about this potentially transformative AI model.

What is Mistral Medium 3? The “Perfect Balance” Claim

Mistral Medium 3 is the latest multimodal language model from Mistral AI, engineered to offer what the company calls “a new class of models that balances performance, cost, and deployability.” It’s designed for professional use cases, with a particular emphasis on coding, multimodal understanding, and robust enterprise integration.

A “Generation 3” Powerhouse

The “3” in its name signifies its place in Mistral AI’s “Generation 3” model lineup, following releases like “Mistral Small 3.” This suggests substantial architectural improvements, refined training methodologies, or enhanced optimization compared to its predecessors, like the soon-to-be-deprecated “Mistral Medium” (mistral-medium-2312).

Strategic Goal: SOTA Performance, Lower Cost, Simpler Deployability

Mistral AI’s messaging is crystal clear: they aim to provide SOTA performance at what’s claimed to be “8X lower cost” compared to unspecified alternatives. This aggressive positioning targets enterprises seeking powerful AI without the premium price tag often associated with top-tier models. The focus on simpler deployability further enhances its appeal for businesses looking to integrate AI seamlessly.

Key Features & Technical Highlights of Mistral Medium 3

While Mistral AI, like many commercial AI labs, keeps full architectural details proprietary, reports and company statements reveal several notable capabilities.

Multimodal Understanding: Beyond Text

A standout feature is its multimodal capability. This means Mistral Medium 3 can process and understand information beyond just text, reportedly including images. While the specifics of supported modalities (e.g., image, audio, video input/output) and the sophistication of cross-modal understanding are yet to be fully detailed, this aligns with the industry trend towards more versatile AI. This greatly expands its applicability from text-only tasks to richer, more complex interactions.
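To make "text plus images" concrete, here is a minimal sketch of how a mixed text-and-image chat message is commonly structured for Mistral's chat API, with a local image inlined as a base64 data URL. The content-part fields (`type`, `text`, `image_url`) follow the format Mistral documents for its vision-capable models. Whether Medium 3 accepts exactly this shape is an assumption, and `build_image_message` is a hypothetical helper.

```python
import base64


def to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_image_message(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build one user message that mixes a text part and an image part.

    Assumption: the endpoint accepts a list of typed content parts, with the
    image passed as a string URL (here, a data URL) under "image_url".
    """
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": to_data_url(image_bytes, mime_type)},
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes standing in for a real chart image read from disk.
    fake_png = b"\x89PNG\r\n\x1a\n"
    message = build_image_message("Summarize the trend in this chart.", fake_png)
    print(message["content"][1]["image_url"][:40])
```

In practice the bytes would come from `open(path, "rb").read()`, and the message would be appended to the `messages` list of a chat request.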

Coding and Function-Calling Prowess

The model is touted for “high performance in coding and function-calling tasks.” Strong coding abilities are invaluable for software development assistance, code generation, and debugging. Robust function-calling allows the model to interact with external tools and APIs, enabling more complex automated workflows and AI agent development. This is a critical feature for building practical, integrated AI solutions.
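Function calling works as a loop: the application describes its tools as JSON schemas, the model replies with a structured tool call instead of prose, and the application runs the tool and sends the result back. The sketch below shows the local half of that loop. The tool definition follows the JSON-Schema `tools` format Mistral documents for its chat completions API; `get_order_status`, the registry, and the sample tool call are hypothetical, and the exact response shape should be checked against the API reference.

```python
import json

# Tool definition in the JSON-Schema style used for function calling.
# "get_order_status" is a hypothetical tool for illustration only.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_order_status",
            "description": "Look up the shipping status of a customer order.",
            "parameters": {
                "type": "object",
                "properties": {
                    "order_id": {"type": "string", "description": "The order identifier."}
                },
                "required": ["order_id"],
            },
        },
    }
]


def get_order_status(order_id: str) -> dict:
    """Hypothetical local implementation the model can ask the app to run."""
    return {"order_id": order_id, "status": "shipped"}


# Map tool names the model may emit to local Python callables.
REGISTRY = {"get_order_status": get_order_status}


def dispatch_tool_call(tool_call: dict) -> dict:
    """Run one model-issued tool call and build the reply message.

    Assumes the call looks like
    {"id": ..., "function": {"name": ..., "arguments": "<JSON string>"}}.
    """
    name = tool_call["function"]["name"]
    args = json.loads(tool_call["function"]["arguments"])
    result = REGISTRY[name](**args)
    # The result is returned to the model as a "tool" role message.
    return {
        "role": "tool",
        "name": name,
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }


if __name__ == "__main__":
    # Hand-written example of what a tool call from the model might look like.
    sample_call = {
        "id": "call_001",
        "function": {"name": "get_order_status", "arguments": '{"order_id": "A-1234"}'},
    }
    print(dispatch_tool_call(sample_call))
```

Keeping an explicit registry means the model can only trigger functions the application has chosen to expose, which matters for the agentic workflows described later.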

Architecture & Efficiency (Inferences)

Specifics like parameter count remain undisclosed (for context, Mistral Small 3 has 24B parameters). Mistral AI has experience with Mixture-of-Experts (MoE) architectures in models like Mixtral, which enhance efficiency by activating only a subset of parameters during inference. It’s plausible Mistral Medium 3 leverages similar advanced techniques to achieve its performance-to-cost ratio. The tokenizer is also likely one of Mistral’s recent efficient versions, contributing to overall performance. The context window, while not specified, is crucial for handling longer documents; previous Mistral Medium models had 32k, while Mistral Large 2 supports 128k.
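For readers unfamiliar with MoE, the toy sketch below illustrates the general top-k routing idea used by Mixtral-style models (Mixtral 8x7B sends each token to 2 of its 8 experts). It uses scalars instead of vectors to stay short, and it is an illustration of the technique, not Mistral Medium 3's architecture, which is undisclosed; `moe_forward` and the toy experts are hypothetical.

```python
import math
from typing import Callable, List, Sequence


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float,
    gate_logits: Sequence[float],
    experts: Sequence[Callable[[float], float]],
    k: int = 2,
) -> float:
    """Toy top-k Mixture-of-Experts combination for a single token.

    Only the k experts with the highest gate logits are evaluated; their
    outputs are mixed with softmax weights renormalized over those k experts.
    """
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])
    # Experts outside `top` are never called: that skipped work is the efficiency gain.
    return sum(w * experts[i](x) for w, i in zip(weights, top))


if __name__ == "__main__":
    calls = []

    def make_expert(i: int) -> Callable[[float], float]:
        def expert(v: float) -> float:
            calls.append(i)  # record which experts actually ran
            return v * (i + 1)
        return expert

    experts = [make_expert(i) for i in range(8)]
    logits = [0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.5, 0.3]
    y = moe_forward(1.0, logits, experts, k=2)
    print(y, sorted(calls))  # only 2 of the 8 experts appear in `calls`
```

In a real model the gate logits come from a learned linear layer applied to the token's hidden state, and each expert is a feed-forward network, but the selection logic is the same.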

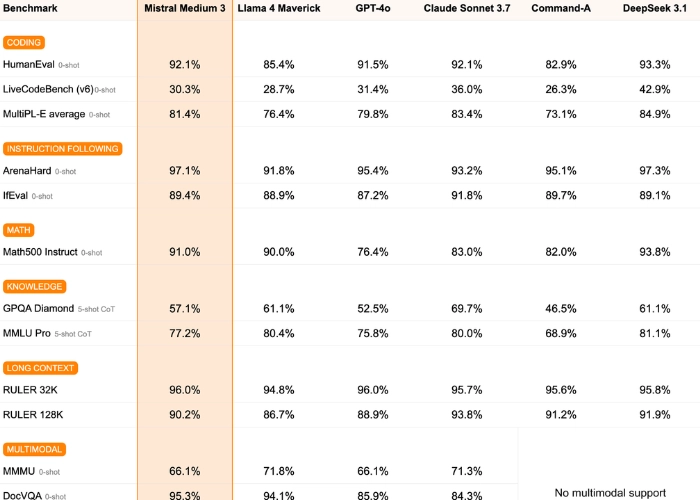

Performance Benchmarks: How Does Mistral Medium 3 Stack Up?

Performance is where the rubber meets the road. While comprehensive, independently verified scores on standard academic benchmarks like MMLU or GSM8K are still emerging from secondary sources, Mistral AI makes bold claims about the model's benchmark results.

Outperforming Competitors: Claims vs. Reality

Mistral AI states that Mistral Medium 3 performs “at or above 90%” of Anthropic’s Claude 3.7 Sonnet on “benchmarks across […] various domains.” This is a significant claim, as Claude 3.7 Sonnet is a well-regarded model. Furthermore, they assert it surpasses leading open models like Llama 4 Maverick and enterprise models such as Cohere Command A. Validating these claims with transparent, third-party benchmark data will be key.

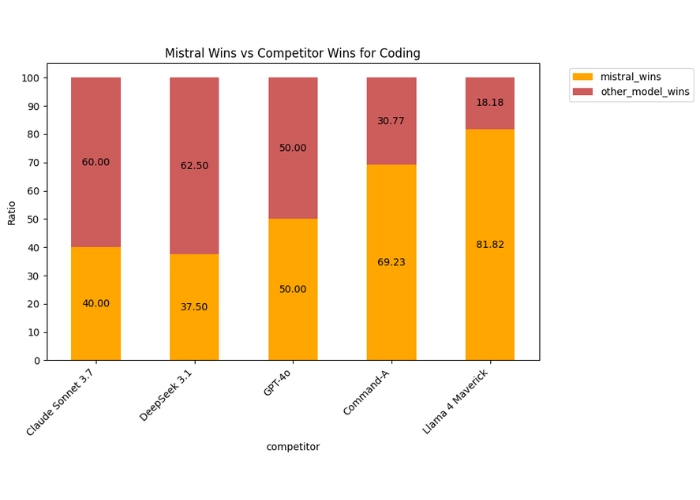

Human Evaluations: Real-World Impact

Beyond academic benchmarks, Mistral AI also highlights third-party human evaluations, especially in coding, where Mistral Medium 3 reportedly “delivers much better performance, across the board, than some of its much larger competitors.” It’s also said to excel in STEM tasks, approaching the performance of larger, slower models. This focus on real-world utility is critical for enterprise adoption.

Enterprise Ready: Use Cases & Deployment

Mistral Medium 3 is explicitly built for enterprise AI solutions, offering a suite of capabilities designed for practical business applications.

Top Applications for Mistral Medium 3

Given its strengths, recommended applications include:

- Software Development & Coding Assistance: Code generation, debugging, translation, documentation.
- Agentic Workflows & Automation: Interacting with external tools via function-calling for customer service automation and internal workflow management.
- Multimodal Applications: Analyzing combined text/visual data (e.g., image descriptions, chart analysis).
- Content Generation & Transformation: Drafting content, summarizing complex information, creative writing.
- Data Extraction & Analysis: Parsing documents, identifying patterns for decision-making.

Beta customers in financial services, energy, and healthcare are already using the model to enrich customer service, personalize business processes, and analyze complex datasets.

Deployment Flexibility: Cloud, Hybrid, On-Premises

Mistral AI emphasizes flexible deployment options:

- API Access: Via “La Plateforme,” Mistral’s development environment.
- Major Cloud Providers: Available on Amazon SageMaker, and soon on IBM watsonx, NVIDIA NIM, Azure AI Foundry, and Google Cloud Vertex AI.
- Self-Hosted Environments: Can be deployed on any cloud, including self-hosted setups with as few as four GPUs.
- Hybrid Options: Allowing enterprises to maintain control over data, potentially in VPCs or on-premises.

This flexibility is crucial for organizations with specific data governance or infrastructure requirements.
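For the API route, a request is an authenticated HTTPS POST carrying a JSON body. The sketch below uses only the Python standard library and separates building the request from sending it. The endpoint URL, the `mistral-medium-latest` model alias, and the `MISTRAL_API_KEY` environment variable are assumptions to verify against Mistral's API documentation, and `build_chat_request` is a hypothetical helper.

```python
import json
import os
import urllib.request

# Assumptions: endpoint and model alias; confirm both in Mistral's API docs.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL_ID = "mistral-medium-latest"


def build_chat_request(prompt: str, api_key: str, model: str = MODEL_ID) -> urllib.request.Request:
    """Prepare (but do not send) a single-turn chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("MISTRAL_API_KEY")  # hypothetical env var name
    if key:
        req = build_chat_request("Summarize our Q1 support tickets in three bullets.", key)
        with urllib.request.urlopen(req) as resp:
            reply = json.load(resp)
        print(reply["choices"][0]["message"]["content"])
    else:
        print("Set MISTRAL_API_KEY to send a live request.")
```

Keeping request construction separate from the network call makes it easy to point the same code at a different base URL, for example a self-hosted or VPC endpoint, if that deployment exposes a compatible interface (an assumption to check per deployment).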

The Disruptive Pricing of Mistral Medium 3

Perhaps the most headline-grabbing aspect of Mistral Medium 3 is its pricing strategy, positioning it as a highly cost-effective LLM.

- Input Tokens: $0.40 per million tokens
- Output Tokens: $2.00 per million tokens

Some early secondary reports listed the output price as $20.80 per million tokens, but $2.00 is the figure in Mistral AI’s announcement. This pricing structure, with output tokens five times as expensive as input tokens, will influence application design, favoring tasks with large inputs and concise outputs (e.g., classification, extraction).
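To see how the input/output price split plays out, the sketch below computes per-request cost from token counts. Rates are passed in as parameters, so either output price mentioned above can be plugged in; the example uses $0.40 input and $2.00 output per million tokens, and the two workloads are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices in US dollars."""
    input_per_m: float
    output_per_m: float


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one request: each token count times its per-million rate."""
    return (input_tokens * pricing.input_per_m + output_tokens * pricing.output_per_m) / 1_000_000


if __name__ == "__main__":
    medium3 = Pricing(input_per_m=0.40, output_per_m=2.00)
    # Hypothetical workloads: extraction (long input, short output) vs. drafting (the reverse).
    extraction = request_cost(medium3, input_tokens=20_000, output_tokens=500)
    drafting = request_cost(medium3, input_tokens=500, output_tokens=20_000)
    print(f"extraction: ${extraction:.4f}  drafting: ${drafting:.4f}")
```

Both requests use 20,500 tokens in total, yet the drafting request costs about $0.0402 against $0.0090 for extraction, roughly 4.5 times as much. That gap is the design pressure toward input-heavy, output-light tasks.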

Mistral AI claims this model “beats cost leaders such as DeepSeek v3, both in API and self-deployed systems.” If performance claims hold, this pricing could indeed disrupt the existing economics of deploying powerful AI models.

Strengths, Weaknesses, and What We Still Need to Know

Based on current information, here’s a balanced view:

Clear Advantages

- Cost-Effectiveness: Potential for SOTA performance at a fraction of competitor costs.
- Strong Coding & Function-Calling: Key for automation and development.
- Multimodal Capabilities: Expands use cases significantly.
- Enterprise Focus: Tailored deployment, customization, and integration features.
- Balanced Proposition: Aims for an optimal mix of performance, cost, and deployability.

Areas for Clarification & Potential Limitations

- Lack of Detailed Public Benchmarks: Independent, granular benchmark scores are needed for full validation.
- Unspecified Multimodal Details: The exact nature and extent of multimodal support remain somewhat vague.
- Opaque Technical Specs: Parameter count, precise architecture, and training data specifics are undisclosed. This is common for commercial models but makes full independent assessment harder.
- Reliance on API (for general users): While enterprise options exist, broader access is API-driven, unlike some of Mistral’s open-weight models.
- General LLM Challenges: Susceptibility to hallucinations, biases (dependent on training data), and potential misuse are inherent to current LLM technology.

The Broader Picture: Mistral Medium 3 in the Mistral AI Family

Mistral Medium 3 is a strategic addition to Mistral AI’s tiered model family, which includes:

- Mistral Small 3: For efficient, compact tasks.
- Mistral Large (latest version): The flagship model for maximum complexity.
- Open-Weight Models: Like Mixtral 8x22B, fostering community and research.
- Specialized Models: Like Codestral (code) and Pixtral (vision).

Medium 3 bridges the gap, offering a powerful commercial solution that’s more capable than “Small” models but more cost-effective than “Large” ones. It succeeds the older “Mistral Medium” and signifies Mistral’s commitment to rapidly iterating and enhancing its offerings for the enterprise market. The company also teased that “something ‘large’” is coming soon, hinting at further developments.

Frequently Asked Questions (FAQ) about Mistral Medium 3

What is Mistral Medium 3?

It’s a new multimodal large language model from Mistral AI, released around May 7, 2025, designed to offer state-of-the-art performance, especially in coding and multimodal tasks, at a highly competitive cost for enterprise applications.

What are the key features of Mistral Medium 3?

Key features include multimodal understanding (processing text and images), high performance in coding and function-calling, enterprise-focused deployment options (API, cloud, self-hosted), and a strategic balance of performance, cost, and deployability.

How does Mistral Medium 3 compare to models like Claude 3 Sonnet?

Mistral AI claims Medium 3 performs at or above 90% of Anthropic’s Claude 3.7 Sonnet on various benchmarks, while being significantly more cost-effective.

What is the pricing for Mistral Medium 3?

It’s priced at $0.40 per million input tokens and $2.00 per million output tokens via API.

Is Mistral Medium 3 open source?

No, Mistral Medium 3 is a commercial model accessible primarily via API or managed enterprise deployments. This contrasts with some of Mistral AI’s other open-weight models.

What are the main use cases for Mistral Medium 3?

It’s suited for software development, agentic workflows, multimodal applications (analyzing text and images), content generation, data extraction, and various enterprise AI solutions, particularly in sectors like finance, energy, and healthcare.

What does “multimodal” mean for Mistral Medium 3?

It means the model can process and understand information from multiple types of data, specifically reported to include text and images, allowing for more complex and versatile applications.

Conclusion: Is Mistral Medium 3 the AI Breakthrough Enterprises Awaited?

Mistral Medium 3 enters the AI arena with a bold proposition: top-tier intelligence without the top-tier price tag. Its claimed prowess in coding and multimodal understanding, coupled with flexible enterprise deployment and a disruptive cost structure, makes it a highly compelling offering. Mistral AI is clearly targeting businesses eager to leverage advanced AI but constrained by the operational costs of flagship models.

The “Generation 3” label and the promise of “SOTA performance at 8X lower cost” are significant. If independently validated, Mistral Medium 3 could indeed be a game-changer, democratizing access to powerful AI and compelling competitors to rethink their own pricing and value propositions.

However, the current information landscape, which relies on secondary sources and initial company claims, calls for a degree of watchful optimism. The AI community and potential enterprise adopters will eagerly await more transparent technical details, comprehensive third-party benchmarks, and real-world case studies.

What stands out is Mistral AI’s aggressive strategy. They are not just building models; they are engineering value propositions. If Mistral Medium 3 delivers on its multifaceted promise, it won’t just be another powerful LLM; it could mark a pivotal shift in how enterprises access and deploy artificial intelligence.